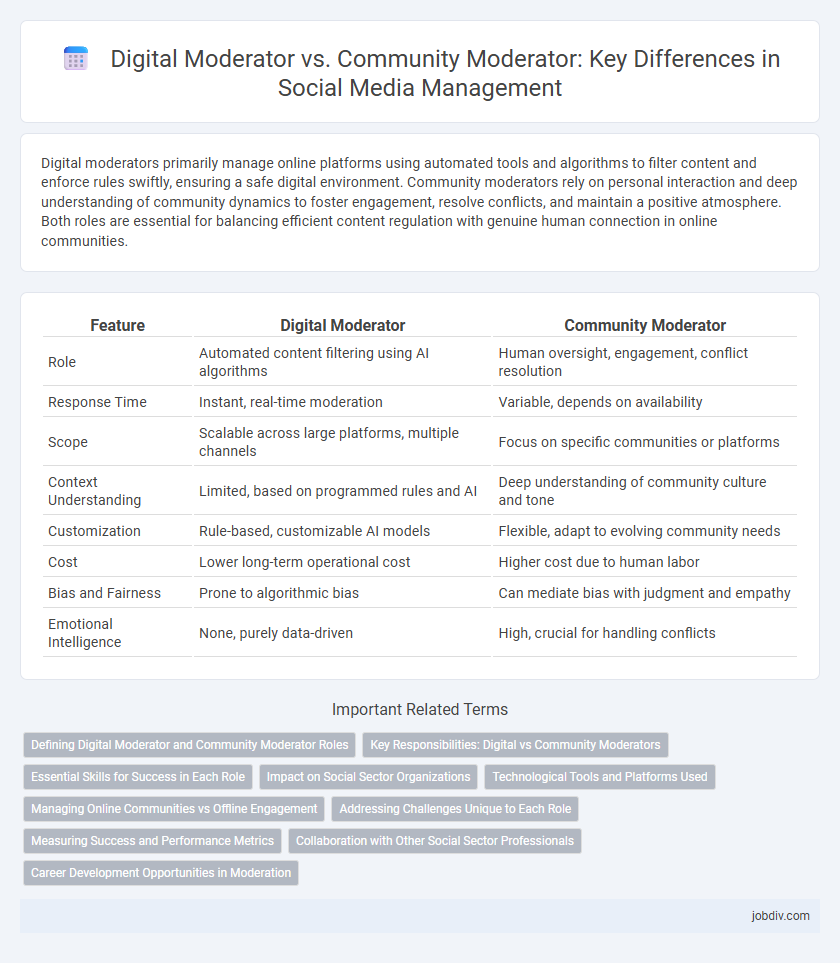

Digital moderators primarily manage online platforms with automated tools and algorithms that filter content and enforce rules quickly, helping keep the digital environment safe. Community moderators rely on personal interaction and a deep understanding of community dynamics to encourage engagement, resolve conflicts, and maintain a positive atmosphere. Both roles are essential: together they balance efficient content regulation with genuine human connection in online communities.

Table of Comparison

| Feature | Digital Moderator | Community Moderator |
|---|---|---|
| Role | Automated content filtering using AI algorithms | Human oversight, engagement, and conflict resolution |
| Response Time | Instant, real-time moderation | Variable; depends on moderator availability |
| Scope | Scales across large platforms and multiple channels | Focuses on specific communities or platforms |
| Context Understanding | Limited to programmed rules and AI models | Deep understanding of community culture and tone |
| Customization | Rule-based, customizable AI models | Flexible; adapts to evolving community needs |
| Cost | Lower long-term operational cost | Higher cost due to human labor |
| Bias and Fairness | Prone to algorithmic bias | Can mitigate bias through judgment and empathy |
| Emotional Intelligence | None; purely data-driven | High; crucial for handling conflicts |

Defining Digital Moderator and Community Moderator Roles

Digital moderators primarily manage online content: they monitor posts and comments, enforce platform policies, and use automated tools to filter inappropriate material on social media, forums, and other digital platforms. Community moderators focus on fostering positive engagement, building trust, and facilitating conversations within specific user groups by addressing member concerns and encouraging collaboration. Both roles help keep online environments safe and vibrant, but they differ in scope: digital moderators emphasize content regulation, while community moderators prioritize interpersonal dynamics.

Key Responsibilities: Digital vs Community Moderators

Digital moderators primarily manage online content by filtering inappropriate posts, enforcing platform policies, and making sure user activity complies with community standards. Community moderators focus on fostering member interactions, facilitating discussions, resolving conflicts, and building a supportive environment within the community. Both roles require vigilance and communication skills, but they differ in emphasis: content control versus community relationship management.

Essential Skills for Success in Each Role

Digital moderators need technical proficiency with moderation tools, skill at content filtering, and the ability to resolve conflicts in real time across diverse platforms. Community moderators prioritize interpersonal communication, empathy, and cultural awareness to foster positive engagement and build trust within tight-knit groups. Both roles demand adaptability, quick decision-making, and a thorough understanding of platform-specific guidelines to keep online environments safe and inclusive.

Impact on Social Sector Organizations

Digital moderators use AI tools and automated systems to manage large online communities efficiently, enabling timely content filtering and sustained user engagement for social sector organizations. Community moderators rely on human judgment to foster trust, empathy, and meaningful interactions, which are crucial for sensitive social issues and volunteer-driven initiatives. Combining the two approaches maximizes impact by balancing scalability with personalized support on social sector platforms.

Technological Tools and Platforms Used

Digital moderators leverage AI-powered tools such as automated content filtering systems, sentiment analysis software, and real-time monitoring dashboards to manage large online communities efficiently. Community moderators primarily use platform-specific moderation features like user reporting, post approval, and manual intervention tools within social networks or forum software. The integration of machine learning algorithms enhances digital moderators' capabilities to detect policy violations and harmful content more rapidly than traditional community moderation methods.
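A minimal sketch of the rule-based side of automated content filtering is shown below. It assumes a small hypothetical blocklist (`BLOCKED_TERMS`) and a hypothetical `moderate` helper; real platforms typically layer machine-learning classifiers on top of rules like these. Matching posts are held for human review rather than deleted, reflecting the limited context understanding of automated tools noted in the comparison table.

```python
import re
from dataclasses import dataclass

# Hypothetical blocklist for illustration; real platforms maintain
# much larger, policy-specific lists.
BLOCKED_TERMS = ["spamlink.example", "buy followers", "scam"]


@dataclass
class Decision:
    action: str         # "approve" or "hold_for_review"
    matched: list[str]  # blocked terms found in the post, sorted


def build_pattern(terms):
    # Escape each term and require word boundaries so that
    # "scam" does not match inside "scampi".
    alternatives = "|".join(re.escape(t) for t in terms)
    return re.compile(rf"\b(?:{alternatives})\b", re.IGNORECASE)


PATTERN = build_pattern(BLOCKED_TERMS)


def moderate(post: str) -> Decision:
    # Collect distinct matches, normalized to lowercase.
    matches = sorted({m.group(0).lower() for m in PATTERN.finditer(post)})
    if matches:
        # Keyword rules lack context, so route to a human instead of deleting.
        return Decision("hold_for_review", matches)
    return Decision("approve", [])
```

For example, `moderate("Click here to BUY FOLLOWERS now")` returns a `hold_for_review` decision with `matched == ["buy followers"]`, while `moderate("I ordered scampi")` is approved because of the word-boundary check.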

Managing Online Communities vs Offline Engagement

Digital moderators specialize in managing online communities: they enforce platform-specific guidelines, monitor user interactions, and resolve conflicts in real time across social media, forums, and virtual events. Community moderators often extend their work to offline engagement, organizing in-person gatherings, facilitating face-to-face discussions, and fostering local network connections that strengthen community bonds. Both roles require strong communication and conflict-resolution skills, but they differ in the environments they work in and the tools they use to manage interactions.

Addressing Challenges Unique to Each Role

Digital moderators oversee the automated systems and algorithms that filter online content and detect spam or harmful behavior. Their central challenge is tuning these AI tools so they catch violations without flagging legitimate posts, which requires technical skill. Community moderators face the challenge of sustaining engagement and resolving conflicts, which depends on understanding group dynamics and cultural nuances within the community. Both roles demand strong communication and problem-solving abilities, but the challenges they address are shaped by digital automation on one side and human interaction on the other.

Measuring Success and Performance Metrics

Success for digital moderators is typically measured by response time, resolution rate, and sentiment scores from automated analysis of user interactions. Community moderators are assessed on member engagement levels, rule compliance rates, and their ability to foster positive, inclusive environments. Both roles benefit from data-driven insights that help refine moderation strategies and improve community satisfaction.
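As a rough illustration of how response time and resolution rate might be computed, the sketch below assumes a hypothetical `Report` record for each user report, holding the minutes until the first moderator action (or `None` if nobody has acted yet) and whether the report was resolved. The field names and the use of the median rather than the mean are assumptions, not an industry standard.

```python
from dataclasses import dataclass
from statistics import median
from typing import Optional


@dataclass
class Report:
    # Hypothetical fields: minutes from user report to first moderator
    # action (None if not yet handled), and whether it was resolved.
    response_minutes: Optional[float]
    resolved: bool


def summarize(reports: list[Report]) -> dict:
    # Only reports that received a response count toward response time.
    handled = [r.response_minutes for r in reports if r.response_minutes is not None]
    return {
        "median_response_minutes": median(handled) if handled else None,
        # Resolution rate is resolved reports divided by all reports.
        "resolution_rate": sum(r.resolved for r in reports) / len(reports) if reports else None,
    }
```

For example, reports answered in 2, 10, and 4 minutes plus one unanswered report give a median response time of 4 minutes; if two of the four were resolved, the resolution rate is 0.5. The median keeps a single long-delayed report from skewing the response-time figure.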

Collaboration with Other Social Sector Professionals

Digital moderators leverage advanced tools to collaborate efficiently with social workers, mental health professionals, and law enforcement for timely intervention and support. Community moderators build grassroots connections, ensuring culturally sensitive communication and local resource sharing within social service networks. Both roles facilitate interdisciplinary cooperation to enhance community well-being and crisis response effectiveness.

Career Development Opportunities in Moderation

Digital moderators often gain experience with content management tools and data analytics, building technical skills that support advancement into tech-driven roles. Community moderators develop a deep understanding of group dynamics and conflict resolution, which positions them for leadership roles in social engagement and community management. Both paths offer growth: digital moderation can lead to digital marketing or product management, while community moderation can lead to social strategy or public relations careers.

Digital Moderator vs Community Moderator Infographic

jobdiv.com